The Union Minister for Electronics and IT stated that the future of AI will be shaped by Small Language Models (SLMs) rather than by extremely large language models (LLMs).

What are SLMs?

- SLMs are compact AI systems built on simpler neural network architectures, designed to generate and understand natural language, as LLMs do.

- Parameters: SLMs range from several million to 30 billion parameters, whereas LLMs often have hundreds of billions or even trillions of parameters.

- At present, nearly 95% of AI work globally is handled by SLMs. E.g. Llama, Mistral, Gemma and Granite.

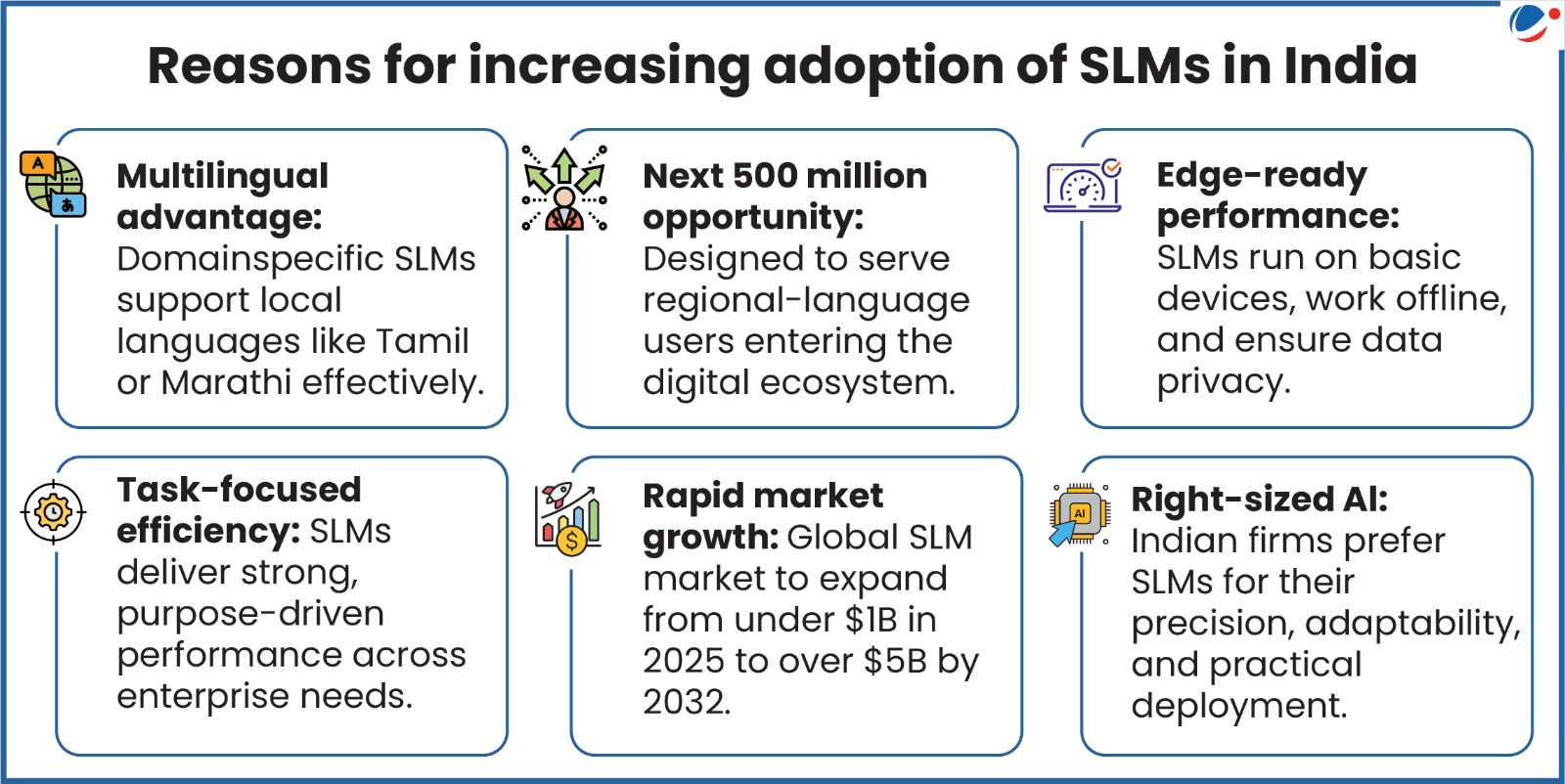

Advantages of SLMs over LLMs

- Cheaper: Smaller models typically require less computational power, reducing costs.

- Ideal for on-device deployment: They are optimized for efficiency and performance on resource-constrained devices with limited connectivity, memory, and electricity.

- Democratization of AI: More organizations can participate in developing models, bringing in a more diverse range of perspectives and societal needs.

- Other: Streamlined monitoring and maintenance, improved data privacy and security, lower infrastructure requirements, deeper expertise for domain-specific tasks, lower latency, etc.
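
The cost and on-device advantages follow largely from parameter count. As a rough illustration only, the sketch below estimates the memory needed just to hold a model's weights, assuming memory is approximately parameters multiplied by bytes per parameter. The 30 billion figure is the upper SLM bound quoted earlier; the 3 billion and 1 trillion sizes, the precision table, and the function name `weight_memory_gb` are illustrative assumptions, and the estimate ignores activations, caches, and runtime overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative sizes: 30 billion is the upper SLM bound from the text;
# the other two are hypothetical round numbers chosen for comparison.
models = {
    "SLM, 3 billion": 3e9,
    "SLM, 30 billion": 30e9,
    "LLM, 1 trillion": 1e12,
}

for name, params in models.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):,.1f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {sizes}")
```

Under these assumptions, a 30-billion-parameter model needs about 60 GB for its weights at 16-bit precision and about 15 GB at 4-bit precision, whereas a 1-trillion-parameter model needs roughly 2,000 GB at 16-bit precision, which is far beyond what a phone or laptop can hold.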

Limitations of SLMs

|