Why in the news?

Recently, experts have raised concerns that social media algorithms can amplify and spread extremism.

Understanding Social Media Algorithmic Amplification

- Social media algorithms: These are sets of computerized rules that examine user behaviour and rank content based on engagement metrics such as likes, comments, shares, timelines, etc.

- They use machine learning models to make customized recommendations.

- They act as amplifiers: posts with higher engagement (shares, likes, etc.), along with hashtags, tend to gain popularity quickly and emerge as viral trends.

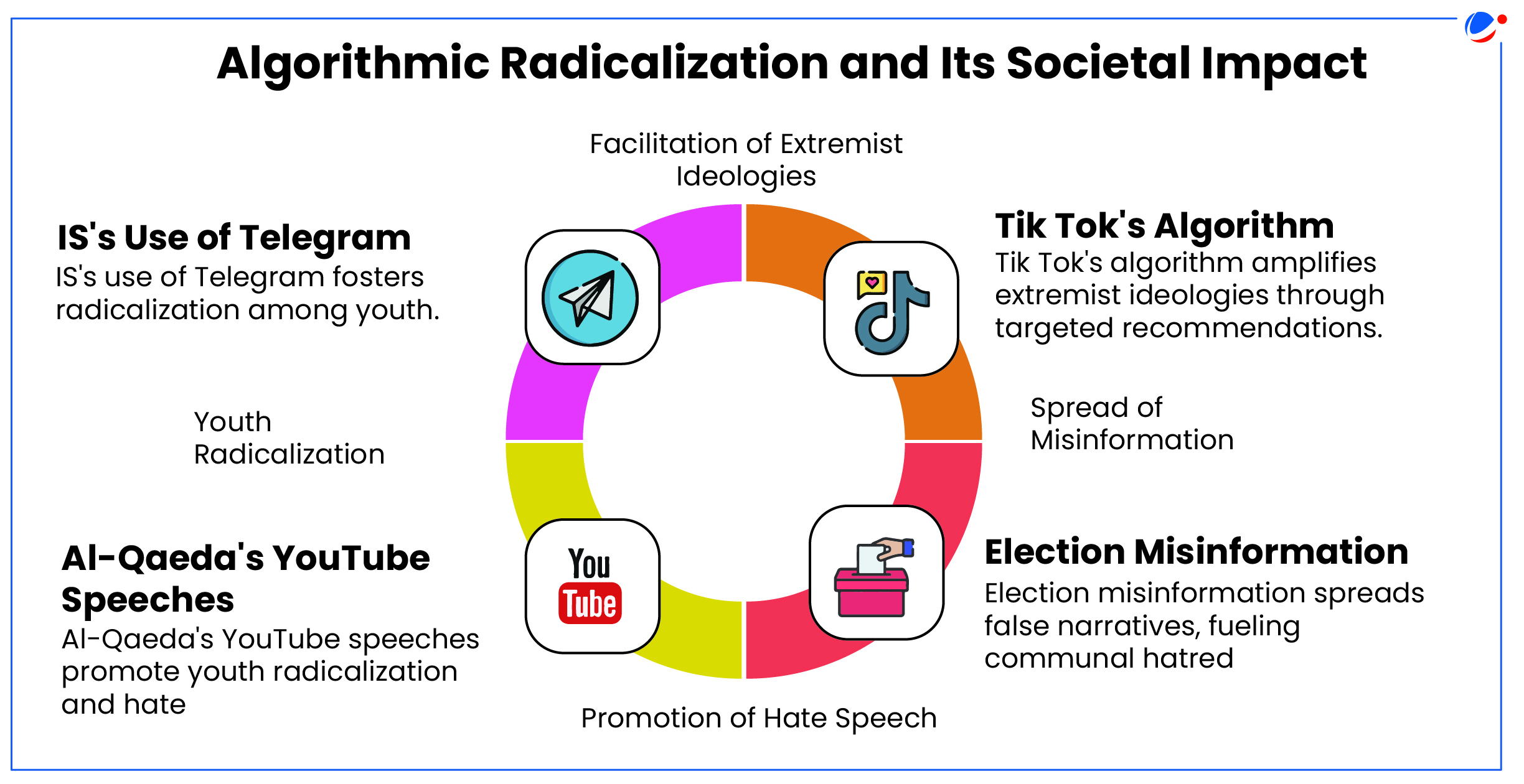
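The ranking-and-amplification loop described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not any platform's actual algorithm: the weights for likes, comments and shares, the 24-hour recency half-life, the three feed slots with their view counts, and the 2% share rate are all hypothetical values chosen for illustration. The top slot receives the most views, those views generate more shares, and the extra shares keep the post on top.

```python
from dataclasses import dataclass

# Hypothetical engagement weights: real platforms do not publish theirs.
WEIGHTS = {"likes": 1.0, "comments": 3.0, "shares": 5.0}
HALF_LIFE_HOURS = 24.0  # hypothetical recency half-life


@dataclass
class Post:
    post_id: str
    likes: int
    comments: int
    shares: int
    age_hours: float


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions, discounted by the post's age."""
    raw = (WEIGHTS["likes"] * post.likes
           + WEIGHTS["comments"] * post.comments
           + WEIGHTS["shares"] * post.shares)
    decay = 0.5 ** (post.age_hours / HALF_LIFE_HOURS)  # exponential recency decay
    return raw * decay


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


def simulate_amplification(posts: list[Post], rounds: int,
                           slot_views=(600, 300, 100),
                           share_percent: int = 2) -> list[Post]:
    """Each round, higher feed slots get more views, and views turn into shares.

    slot_views and share_percent are hypothetical; posts are updated in place.
    """
    for _ in range(rounds):
        ranked = rank_feed(posts)
        for post, views in zip(ranked, slot_views):
            post.shares += views * share_percent // 100
    return posts
```

With three posts of equal age, where post A starts only slightly ahead of post B, five rounds of `simulate_amplification` leave A with 65 shares against B's 35: the ranking hands A the most visible slot, and that visibility turns a small initial lead into a wide gap.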

- Algorithmic Radicalisation: It is the idea that algorithms on social media platforms drive users towards progressively more extremist propaganda and polarizing narratives.

- This in turn shapes users' ideological stances, exacerbating societal divisions, promoting disinformation and bolstering the influence of extremist groups.

- It reflects how social media algorithms, though intended to boost user interaction, inadvertently construct echo chambers and filter bubbles that confirm users' pre-existing beliefs, leading to confirmation bias, group polarization, etc.

- It shows how social media platforms coax users into ideological rabbit holes and shape their opinions through a selective content curation model.
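The echo-chamber dynamic above can be sketched as a feedback loop in which the recommender learns from engagement with content it chose itself. This is a deliberately simplified toy, assuming a purely greedy recommender, that every served item is engaged with, and a hypothetical boost of 0.1 per engagement; the topic names are illustrative placeholders.

```python
def recommend(prefs: dict[str, float]) -> str:
    """Greedy recommender: always serve the topic with the highest inferred preference."""
    return max(prefs, key=prefs.get)


def run_feedback_loop(prefs: dict[str, float], steps: int,
                      boost: float = 0.1) -> tuple[dict[str, float], list[str]]:
    """Simulate a recommend -> engage -> update cycle.

    Each served item is assumed to be engaged with, which raises the inferred
    preference for its topic by `boost` (a hypothetical learning rate) before
    renormalising so the preferences sum to 1.
    """
    prefs = dict(prefs)  # avoid mutating the caller's dict
    served = []
    for _ in range(steps):
        topic = recommend(prefs)
        served.append(topic)
        prefs[topic] += boost
        total = sum(prefs.values())
        prefs = {t: w / total for t, w in prefs.items()}
    return prefs, served
```

Starting from a near-even split such as `{"sports": 0.34, "politics": 0.33, "music": 0.33}`, fifty rounds serve only the initially favoured topic and push its inferred preference above 0.99. Recommenders can add exploration or diversity terms to counter this lock-in; the sketch omits them on purpose to isolate the narrowing effect.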

Challenges in curbing Algorithmic Radicalization

- Complex mechanisms involved: The opacity of the algorithms used by social media platforms makes it difficult to address extremist content.

- Social media algorithms work as 'black boxes': even some of their developers do not fully understand the underlying processes by which certain content gets recommended.

- E.g., the complexity of the operational mechanics of TikTok's "For You" page limits efforts to mitigate its algorithmic bias.

- Modulated content: Extremist groups disguise their radical content with euphemisms or symbols to evade detection systems.

- E.g., IS and al-Qaeda use coded language and satire to avoid detection.

- Moderation vs. free speech: Maintaining the right balance between effective content moderation and free speech is a complex issue.

- Extremist groups exploit this delicate balance by keeping their content within the permissible limits of free speech while still spreading divisive ideologies.

- Failure to account for local context: Extremist content emerges from the socio-political undercurrents of a specific country, and algorithms deployed globally often fail to account for these local socio-cultural contexts, exacerbating the problem.

- Lack of international regulation and cooperation: Countries primarily view radical activities through the lens of their national interest rather than from the perspective of global humanity.
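Why euphemisms and symbols defeat detection can be seen in a toy keyword filter. The sketch below is an illustration, not how any platform's moderation actually works: the single blocklisted word and the character-substitution table are hypothetical placeholders.

```python
import re

# Hypothetical flagged term; real moderation lists are far larger and not public.
BLOCKLIST = {"forbidden"}

# Common character substitutions ("leetspeak") mapped back to letters.
LEET_MAP = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                          "5": "s", "@": "a", "$": "s"})


def naive_flag(text: str) -> bool:
    """Flag text if any lowercase word exactly matches the blocklist."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


def normalised_flag(text: str) -> bool:
    """Undo simple character substitutions before matching."""
    cleaned = text.lower().translate(LEET_MAP)
    words = re.findall(r"[a-z]+", cleaned)
    return any(word in BLOCKLIST for word in words)
```

Here `normalised_flag("this is f0rb1dd3n")` returns `True` where `naive_flag` returns `False`, so simple character swaps can be undone. An agreed euphemism such as "meet for the usual picnic", however, passes both checks, because its meaning is not in the text itself; this is one reason coded language is hard for detection systems to catch.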

Steps taken to curb Algorithmic Radicalisation

Global steps

Indian steps

Way forward

- Algorithmic Audits: Regular algorithm audits should be made mandatory to ensure transparency and fairness, similar to the independent audit requirements under the European Union's (EU's) Digital Services Act.

- Accountability measures: Policymakers should clearly define the rules for algorithmic accountability, including penalties for platforms that fail to address the amplification of harmful content.

- E.g., Germany's Network Enforcement Act (NetzDG) imposes fines on social media platforms that fail to remove manifestly illegal content within 24 hours of receiving a complaint.

- Custom-made content moderation: Customized moderation policies (or algorithmic frameworks) tailored to local contexts can make interventions against radicalisation on social media platforms more effective.

- E.g., regulators in France partnered with social media companies to enhance their algorithms' ability to detect and moderate extremist content, considering various dialects spoken within the country.

- Public awareness: Governments should conduct public awareness drives to help users identify propaganda and avoid engaging with extremist content.

- E.g., the UK's Online Safety Bill contains provisions for public education initiatives to improve online media literacy.
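One quantity an algorithmic audit of the kind proposed above could report is an amplification ratio: the share of flagged content in what a recommender actually served, divided by its share in the pool of candidates it could have served. The sketch below assumes items have already been labelled (the hypothetical `flagged` field, e.g. set by human reviewers) and is an illustrative metric, not a requirement taken from the Digital Services Act or any other law.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    flagged: bool  # hypothetical label, e.g. assigned by human reviewers


def flagged_share(items: list[Item]) -> float:
    """Fraction of items carrying the flagged label."""
    if not items:
        raise ValueError("cannot compute a share of an empty list")
    return sum(item.flagged for item in items) / len(items)


def amplification_ratio(candidate_pool: list[Item],
                        recommended: list[Item]) -> float:
    """Values above 1 mean flagged items are over-represented in recommendations
    relative to the candidate pool; values below 1 mean they are down-ranked."""
    baseline = flagged_share(candidate_pool)
    if baseline == 0:
        raise ValueError("no flagged items in the candidate pool")
    return flagged_share(recommended) / baseline
```

For a ten-item pool with two flagged items (20%), a served feed of five items that includes both flagged items (40%) gives a ratio of 2.0: flagged content was surfaced twice as often as its prevalence in the pool.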