Lethal Autonomous Weapon Systems (LAWS) are a class of advanced weapons that can identify and engage targets without human intervention.

- At present, no commonly agreed definition of Lethal Autonomous Weapon Systems (LAWS) exists.

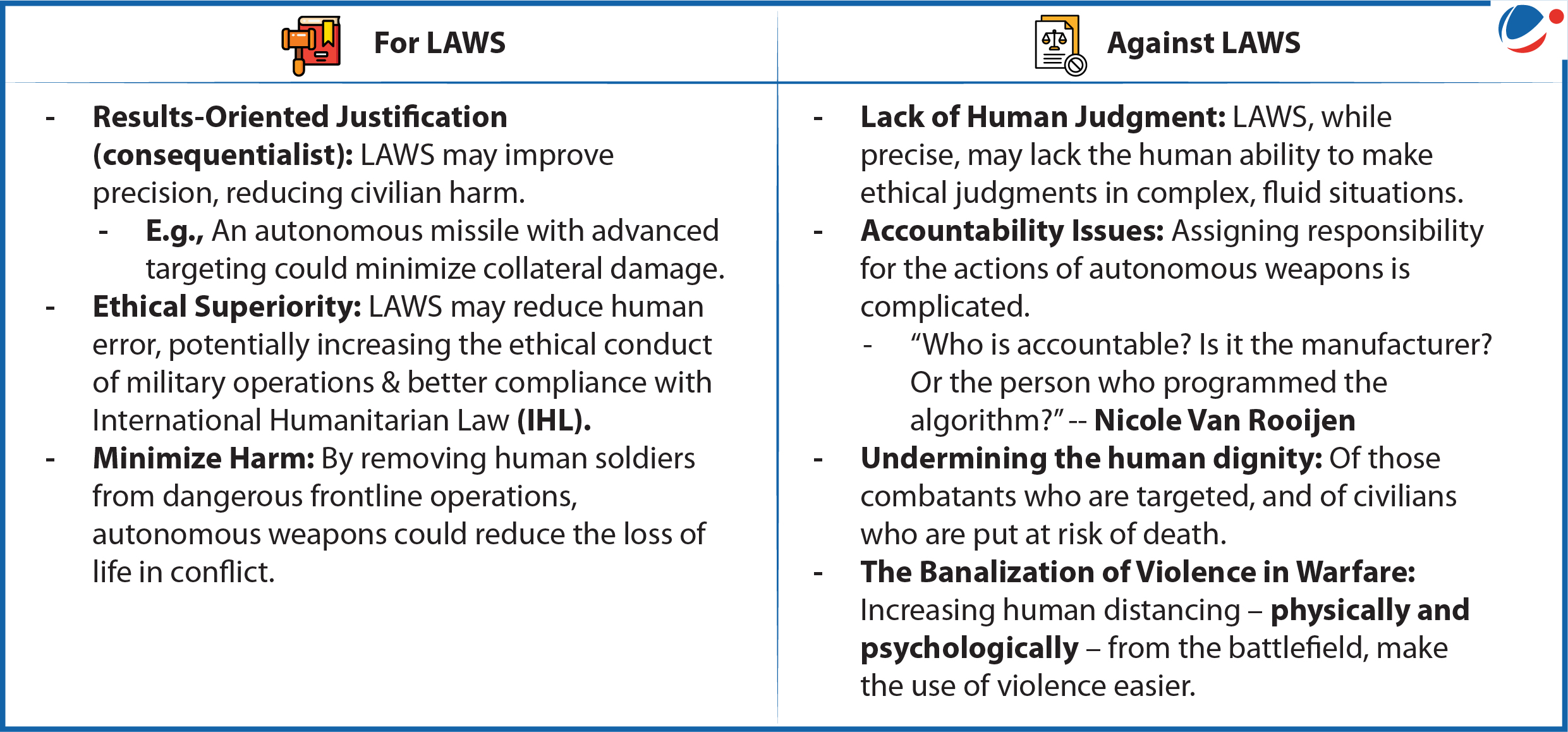

- Ethical Question: Can we ethically delegate life-and-death decisions to machines without compromising human moral agency and dignity?

The ethical debate on Lethal Autonomous Weapon Systems (LAWS)

Ethical Concerns in Human-Machine (Lethal Autonomous Weapon Systems) Interactions

- Automation bias: Where humans place too much confidence in the operation of an autonomous machine.

- Surprises: Where a human is not fully aware of how a machine is functioning at the point they need to take back control.

- Moral buffer: Where the human operator shifts moral responsibility and accountability to the machine as a perceived legitimate authority.