Why in the news?

The Council of Europe (COE) Framework Convention on Artificial Intelligence (AI) and human rights, democracy and the rule of law, the first legally binding international treaty on AI, was opened for signature.

More on the news

- COE is an intergovernmental organisation formed in 1949, with 46 member states, including all countries of the EU bloc; Japan and the U.S. are among its observer states.

- The Framework Convention was initiated in 2019, when the ad hoc Committee on Artificial Intelligence (CAHAI) was tasked with examining the feasibility of such an instrument.

- It aligns with new regulations and agreements, including the G7 AI pact, Europe's AI Act, and the Bletchley Declaration.

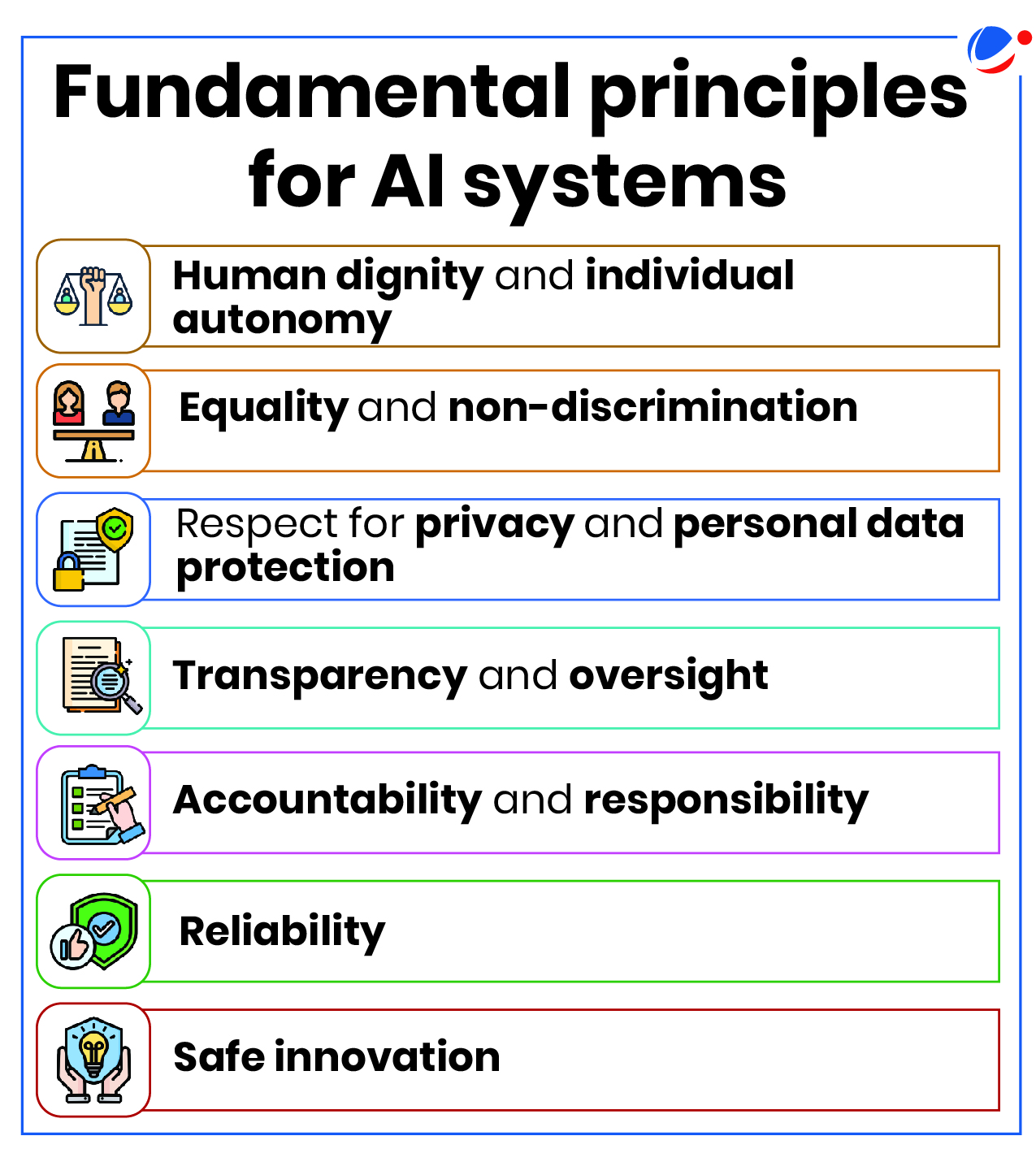

Key provisions of the Framework Convention


What is the need for global AI governance?

- Risk Mitigation: Global governance can set standards to mitigate global risks associated with AI systems, such as job displacement, discrimination, misuse in surveillance and military applications, and AI arms races.

- Threat to democratic functioning: E.g., disinformation and deepfakes can undermine the integrity of democratic processes.

- Tackling Inequities: The global AI governance landscape is uneven, with many developing nations, particularly in the global South, lacking a voice in decision-making.

- Transboundary nature: Issues associated with AI systems like data privacy and security can impact multiple countries simultaneously.

- AI Misalignment: It happens when AI systems act in ways that do not reflect human intentions. Examples include unsafe medical recommendations, biased algorithms, and issues in content moderation.

- Wide scale deployment: AI systems are increasingly being adopted in decision-making in key sectors like healthcare, finance, and law enforcement.

What are the challenges in global AI governance?

- Representation Gap: AI governance initiatives largely lack representation, leading to significant gaps in national and regional efforts that affect AI assessment decisions and funding.

- E.g., many initiatives exclude entire regions: only seven countries participate in all of them, while 118 countries, mostly from the global South, are left out entirely.

- Coordination Gap: The lack of global mechanisms for international standards and research initiatives leads to issues such as:

- Fragmentation, reduced interoperability and incompatibility between different plurilateral and regional AI governance initiatives.

- Ad Hoc Responses to AI challenges.

- Narrow focus of existing initiatives, hindering their ability to tackle AI's complex global implications.

- Implementation Gap:

- Lack of strong mechanisms to hold governments and private companies accountable for their commitments regarding AI governance.

- Limited networking and resources in national strategies for AI development, leading to ineffective implementation.

- No dedicated funding mechanism for AI capacity-building with sufficient scale or authority.

Steps taken to regulate AI

In India

Global


Way forward

- Suggestions of the UN report titled 'Governing AI for Humanity':

- Adopt a flexible, globally connected approach to AI governance that fosters shared understanding and benefits.

- Create an independent International Scientific Panel on AI, consisting of diverse experts who serve voluntarily.

- Establish a biannual policy dialogue on AI governance at UN meetings to engage governments and stakeholders, focusing on best practices that promote AI development.

- Create an AI exchange that unites stakeholders to develop and maintain a register of definitions and standards for evaluating AI systems.

- Establish an AI capacity development network that connects UN-affiliated centres to provide expertise and training to key stakeholders.

- Create a Global Fund for AI, an independently managed fund that can collect public and private contributions and distribute resources to enhance local access to AI tools.

- Other steps that can be taken:

- Formulating AI Law: The Ministry of Electronics and Information Technology (MeitY) is drafting a new law on artificial intelligence (AI) to harness its economic benefits while addressing potential risks and harms.

- Ensuring AI Alignment: AI alignment ensures that artificial intelligence systems operate according to human values and ethics and can address issues like discrimination and misinformation.