Why in the News?

The Evaluating Trustworthy Artificial Intelligence (ETAI) Framework and Guidelines for the Indian Armed Forces were launched by the Chief of Defence Staff.

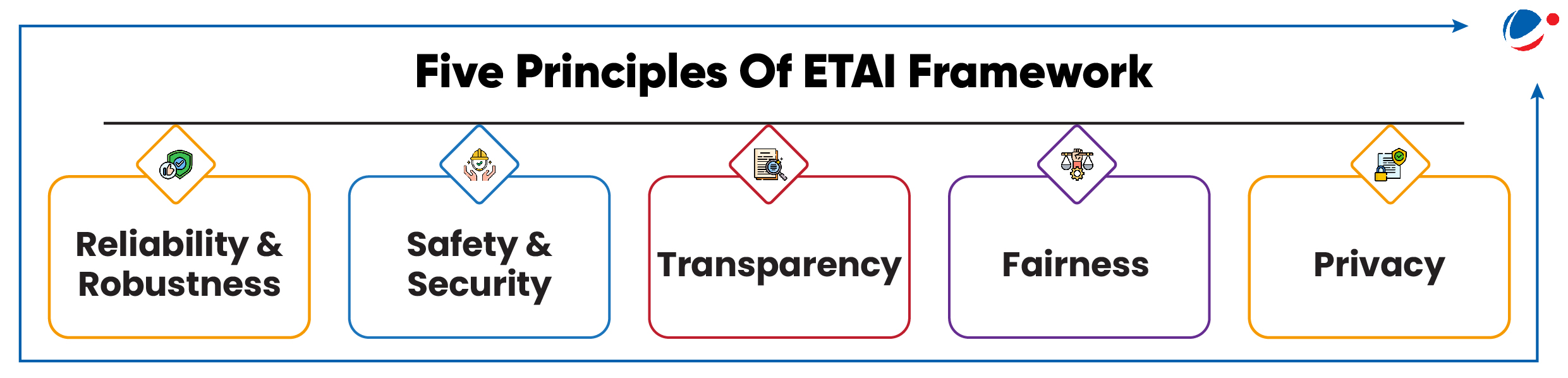

About Evaluating Trustworthy Artificial Intelligence (ETAI) Framework

- It is a risk-based assessment framework designed to ensure that reliable emerging technology is integrated into critical defence operations.

- It defines a comprehensive set of criteria for evaluating trustworthy AI, and the ETAI Guidelines provide specific measures to implement these criteria.

About AI and Defence

- AI systems are used in the defence sector in two ways, as platforms providing supporting functions and offensive functions:

- Supporting functions such as intelligence, surveillance, navigation, and enhanced Command and Control (C2) capabilities.

- Offensive functions such as selecting targets and carrying out strikes, e.g., drone swarms and AI-driven hacking.

- AI can also study patterns of cyber-attacks and devise protective strategies, for example against malware attacks.

Significance of using AI in various aspects of Defence & Security

- Autonomous Weapons and Loitering Weapon Systems: These autonomously search for targets, identify them and engage, allowing faster and more precise strikes. E.g., Israeli Harpy and Harop drones.

- Enhanced Target Recognition and Precision: To identify and engage specific military targets such as missile systems while avoiding civilian infrastructure where desired.

- E.g., the Iranian-made Shahed-136 AI drones used in the Russia-Ukraine war.

- Real-Time Data Analysis: To process large volumes of data from surveillance systems in real time, providing critical intelligence for battlefield decision-making.

- E.g., Project Maven, a U.S. initiative to analyse large quantities of surveillance data.

- Combat Simulation and Training: Generative AI can improve military training and educational programmes by creating new training materials. E.g., training modules for Sukhoi-30 MKI aircraft.

- Prediction of Crimes and Criminal Tracking: Using Command, Control, Communications, Computers, Intelligence, Surveillance and Reconnaissance (C4ISR) systems.

- For example, BEL developed the Adversary Network Analysis Tool (ANANT) for the prediction of attacks.

- Protection against Cyber-attacks: AI can detect potential threats and use predictive analytics to anticipate future attacks.

- For example, Project Seeker, developed and deployed by the Indian Army for surveillance, garrison security and population monitoring.

Issues in using AI in Defence

- Use by Non-State Actors: Criminals and terrorists can leverage the power of generative models for planned attacks. E.g., the Islamic State issued a guide on how to use generative AI tools.

- Social Engineering: AI can manipulate social media algorithms to influence target groups for radicalisation. E.g., neo-Nazi AI-generated content shared on platforms such as Telegram.

- New Malware Creation: Capabilities such as the ability to write malware may make AI dangerous in the hands of bad actors.

- For example, BlackMamba, an AI-generated malware, can evade most existing endpoint detection and response (EDR) systems.

- There is no specific international law that sets limits on its use in order to protect civilians and prevent human rights violations.

- AI in Surveillance and Privacy Violations: For example, China's facial recognition surveillance systems in Xinjiang are used to track Uyghur Muslims, violating their human rights.

Ethical concerns

- Automation bias: It is difficult for such systems to differentiate between lawful targets and civilians, and a lack of data can lead to unpredictable attacks.

- Principle of proportionality: These systems would need qualitative analysis to judge whether an attack carried out against a lawful target would be considered proportionate.

- Predictability of an autonomous system: If a weapon cannot be controlled because the operator is unable to understand the system, its use can violate international humanitarian law.

- Objectification of human targets: The integration of AI-enabled weapon systems facilitates swift attacks, leading to heightened tolerance for collateral damage.

Indian Initiatives in adopting AI in defence

Steps taken Internationally to regulate AI in defence


Way Forward

For national security

- Intelligence, Surveillance and Reconnaissance (ISR): The Indian military needs to build working relationships with India's private technology firms in the AI space, as the US and China have done.

- Develop both offensive and defensive cyber-war capabilities: As cyber warfare becomes faster and more sophisticated, it becomes necessary both to defend and to counterattack.

For International regulation

- Need for international law: To limit the types of targets, the geographical scope, and the context in which AI weapon systems would be employed.

- Arms control regime on AI: States and regional organisations can bring AI weapon systems and the industry under an arms control regime.

- Identify principles for responsible military use of AI: Codify such principles in official documents through collaborative multilateral processes.