Introduction

Neurotechnology, or brain technology, is redefining what it means to interact with the nervous system. The field is expanding rapidly, driven by breakthroughs in artificial intelligence, wireless implants, and minimally invasive surgical techniques. Alongside unprecedented therapeutic benefits, this technological revolution introduces new ethical and legal challenges, particularly around mental privacy, data rights, and cognitive autonomy.

What is Neurotechnology?

- About: It is technology that enables direct interaction between the human nervous system, including the brain, and digital or mechanical systems, e.g. brain-computer interfaces (BCIs).

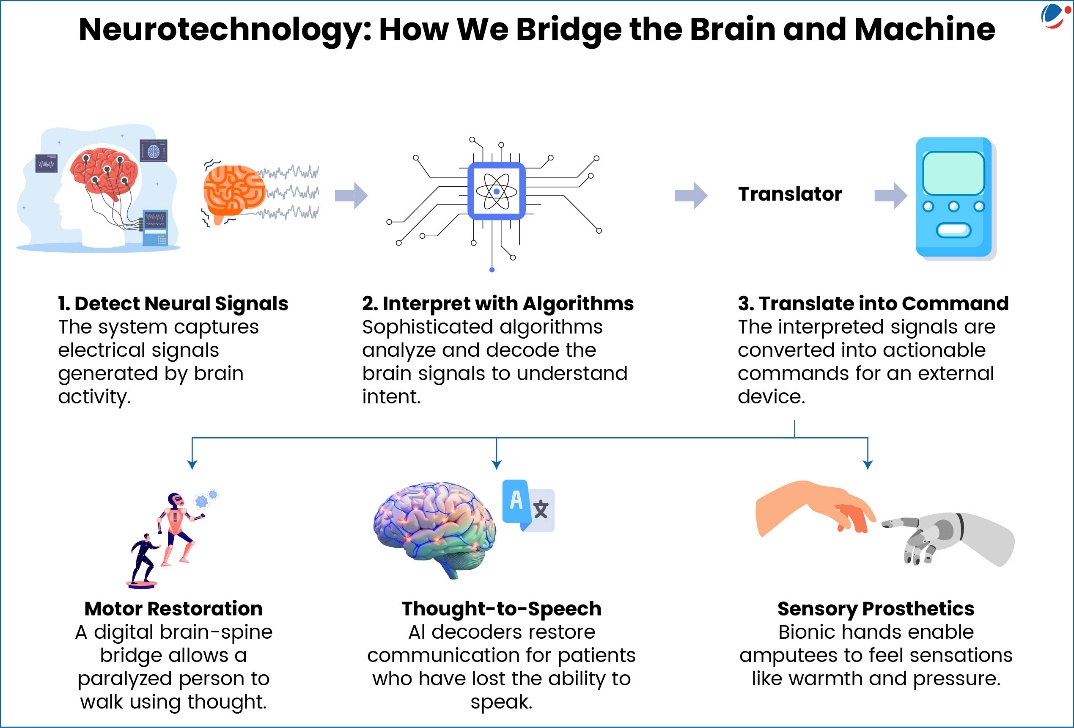

- Mechanism: These systems operate by detecting neural signals, interpreting them using algorithms, and translating them into commands for devices such as prosthetics, computers, or communication interfaces.

- Applications include:

  - Motor restoration: Digital brain-spine bridges can enable a person with paralysis to stand, walk, and climb stairs using thought-driven movement.

  - Thought-to-speech: AI-driven neural speech decoders can restore speech for patients with ALS or paralysis at near-conversational speeds.

  - Sensory prosthetics: Osseointegrated bionic hands can enable amputees to feel warmth and pressure while reducing phantom limb pain.

- Regulation: While its medical use is strictly regulated, neurotechnology remains largely unregulated in other areas.

  - Many consumers use this technology without knowing it, via common devices such as connected headbands or headphones, which use neural data to monitor heart rate, stress, or sleep.

  - This highly sensitive data can reveal thoughts, emotions, and reactions, and may be shared without consent.
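
The detect, interpret, translate mechanism described above can be illustrated with a deliberately simplified sketch. Everything in it is an assumption made for illustration: the signal is a made-up list of amplitudes, the "decoder" is a simple power threshold, and the names `POWER_THRESHOLD`, `MOVE`, and `HOLD` are hypothetical. Real BCIs rely on far more sophisticated signal processing and trained decoding models.

```python
from statistics import mean

# Hypothetical threshold, chosen only for this illustration.
POWER_THRESHOLD = 0.5


def detect(samples, window=4):
    """Step 1: split a stream of signal amplitudes into fixed-size windows."""
    return [samples[i:i + window]
            for i in range(0, len(samples) - window + 1, window)]


def interpret(window):
    """Step 2: toy decoder that labels a window by its mean signal power."""
    power = mean(x * x for x in window)
    return "active" if power > POWER_THRESHOLD else "rest"


def translate(state):
    """Step 3: map the decoded state to a (hypothetical) device command."""
    return {"active": "MOVE", "rest": "HOLD"}[state]


def run_pipeline(samples):
    """Chain the three steps: detect -> interpret -> translate."""
    return [translate(interpret(w)) for w in detect(samples)]


# Made-up signal: a quiet window followed by a high-amplitude window.
signal = [0.1, -0.2, 0.1, 0.0, 1.0, -0.9, 1.1, -1.0]
print(run_pipeline(signal))  # ['HOLD', 'MOVE']
```

Even in this toy form, the intermediate "state" labels show why neural data is sensitive: the same decoding step that drives a prosthetic also produces an inference about what the user's brain is doing.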

Stakeholders and their interests

| Stakeholder | Core Interests | Ethical Dilemmas |
| --- | --- | --- |
| Patients & Persons with Disabilities | Recovery of mobility, speech, memory restoration; pain relief; improved quality of life | Risk of psychological harm, dependence on technology, affordability, misuse of neural data |
| Medical Professionals & Neuroscientists | Advancement of science; new therapeutic options; professional recognition | Duty of care vs. commercial influence; consent for experimental tech |
| Tech Companies & Start-ups | Innovation leadership; data ownership; profits; market dominance | Monetization of brain data; unsafe deployment for commercial gain |
| Governments & Regulators | Public safety; national security; innovation ecosystem | Balancing innovation with risk regulation; potential for state surveillance |
| Military & Strategic Security Establishments | Cognitive enhancement for soldiers; brain-controlled weapons | Escalating arms race; violation of human dignity; risks of coercive use |

Ethical Issues

- Threat to Privacy and Freedom of Thought: BCIs can infer cognitive states, emotions, or intentions, and could be used to influence decisions, undermining free will. Misuse could expose intimate data without consent.

- Threat to Personal identity and autonomy: Brain–computer connections and algorithms may shape thoughts and decisions. There is a risk of diluting individuality and reducing autonomy.

- Monetization of Neural Data: Corporations could collect and commercialize brain data, analogous to behavioral data today but significantly more intimate.

- Surveillance: Neural data could enable tracking of attention, emotions, or decision-making patterns, whether by the State for security purposes or by multinational corporations (MNCs) for commercial purposes, raising fears of manipulation.

- Dual-use Risks: Technologies developed for rehabilitation can be repurposed for military or intelligence applications, such as hands-free drone control or neural monitoring.

Way Forward (Key Recommendations from UNESCO's 2025 Recommendation on the Ethics of Neurotechnology)

- Cognitive Liberty and Mental Privacy: Individuals must retain self-determination over their own mental experiences, and unauthorized collection or use of neural data must be prohibited.

- Human Agency and Accountability: Neuro-devices must remain under user control ("humans in the loop").

- Protection of Vulnerable Populations: Special safeguards are needed, especially for children, who may not be able to consent meaningfully.

- Transparency and Explainability: Users must understand device functions, risks, and data practices.

- Non-discrimination and Inclusion: Prevent unequal access and neuro-based social stratification, particularly for lower-middle-income countries and marginalized groups, so that neurotechnology does not create a new digital divide.

- Governance and Regulatory Frameworks: Neurotechnology must not be used for surveillance or to compel individuals to testify against themselves.

- States should develop assessment mechanisms (covering human rights, economic, and other impacts) and regulatory sandboxes (for testing and validating technologies).

- States should classify neural data and data allowing mental inferences as "sensitive personal data".

Conclusion

Neurotechnology offers transformative medical benefits but raises profound ethical risks. Ensuring mental privacy, human autonomy, equity, and strong global regulation is therefore essential to harness innovation responsibly while safeguarding dignity and fundamental rights.

Case Study Practice Question

A start-up called NeuroLink Solutions has developed a non-invasive brain-computer interface (BCI) that can help individuals with paralysis move their limbs using thought patterns. The device has shown remarkable results in clinical trials, enabling users to regain mobility and dramatically improving their quality of life. The company now plans to commercially launch the product within six months, priced at ₹38 lakh per device. It is also finalising agreements with two global tech firms to access and monetise anonymised neural data collected from users to "improve AI algorithms." Users will have to agree to data-sharing as part of the product terms. A senior neurologist, Dr. Meera, a respected figure in the medical community, has joined the start-up as a scientific advisor. She believes that the BCI will be transformative for patients, but she is increasingly uncomfortable that:

Meanwhile, the parents of a 12-year-old child with paralysis, desperate for a cure, are pressuring Dr. Meera to approve early access to the device for compassionate use, even though the child cannot meaningfully provide consent. The CEO insists that commercialization and large-scale data partnerships are essential to "remain competitive and accelerate innovation before rivals catch up." Answer the following questions:
