Why in the News?

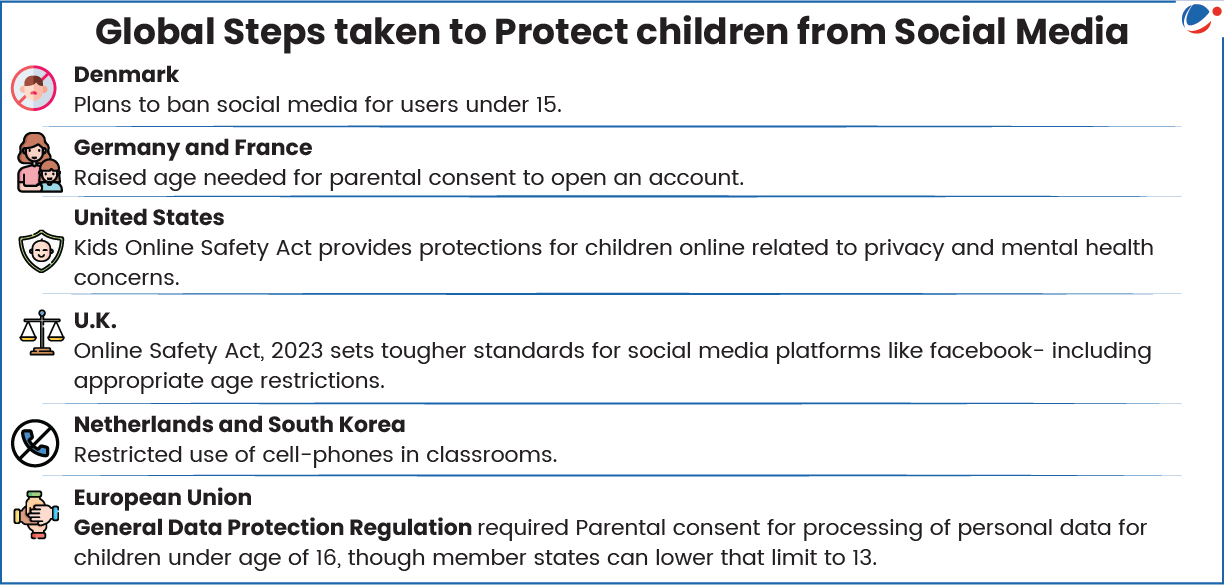

Australia recently became the first country to enforce a nationwide social media ban for children under 16, under the Online Safety Amendment (Social Media Minimum Age) Act 2024.

More on the News

- Ban initially covers 10 platforms including Facebook, Instagram, Snapchat, Threads, TikTok, X, YouTube, Reddit and streaming platforms Kick and Twitch.

- An Age-Restricted Social Media Platform (ARSMP) is an electronic service that enables online social interaction between two or more end-users; such platforms are required to prevent underage users from creating or keeping accounts.

- Companies are responsible for ensuring children under the minimum age cannot access their platforms.

Need for Banning Social Media for Children

- Cognitive Development: Increased use of social media by children hampers cognitive processes, weakening concentration and the ability to learn and retain information, thereby affecting school performance.

- Mental Health: Children addicted to social media often show higher rates of anxiety, depression, low self-esteem and low emotional self-efficacy, along with more cases of Attention Deficit Hyperactivity Disorder (ADHD, a neurodevelopmental disorder).

- Physical Health: Exposure to certain content promotes sedentary behavior, eating disorders and unattainable beauty ideals, leading to issues like disrupted sleep patterns and obesity.

- Social development: Constant use of virtual platforms reduces face-to-face interaction, leading to social isolation, strained family relations and difficulty with emotional regulation.

- Online safety concerns: Social media makes children susceptible to online abuse such as cyber-bullying, exploitation and exposure to harmful content and online sexual predators.

- Dangerous Viral Trends: Risky viral challenges on social media, like the "Blackout Challenge" (breath-holding) and "Devious Lick" (stealing), can result in injuries, legal trouble, and disciplinary action, particularly among children and adolescents.

- Parental supervision gaps: In urban areas and dual-working households, limited parental time and supervision have contributed to excessive screen exposure, often described as the "iPad kid" phenomenon.

- Algorithmic manipulation: Engagement-driven algorithms personalise content to maximise screen time, making it difficult for children to disengage and increasing the risk of overuse.

Concerns associated with Banning of Social Media for Children

- Age verification and privacy risk: Social media platforms have not clearly disclosed reliable methods to verify users' age, raising the risk of loopholes and of evasion by underage users through virtual private networks (VPNs).

- Further, proposed verification tools, such as government IDs, facial or voice recognition, or age inference, may compromise user privacy and data protection.

- Risk of over-regulation: Gaming and communication platforms like Roblox and Discord fear being targeted, leading to restrictive measures that may affect legitimate users.

- Rising unsafe spaces: A social media ban is hard to enforce and may push children to unsafe spaces on the internet, such as the dark web.

- Human rights infringement and exclusion: Banning social media can restrict freedom of expression and access to information, and potentially exclude vulnerable groups including LGBTQIA+ youth who rely on online communities for support.

- Limits development of digital skills: A ban curtails the positive uses of social media, such as creative expression, collaborative learning via educational content, and interest-based networking.

India's initiative to protect children on Online Platforms

Way Forward

- Child-Centric Digital Governance: Governments, regulators, tech firms, and parents must jointly create safe, inclusive digital spaces, redesign platforms around child safety, and enforce safeguards like age-appropriate design, privacy-by-default, and algorithmic accountability.

- Example: the UK's Age Appropriate Design Code.

- Strengthen redressal mechanisms: Empower Child Helpline 1098, appoint technically trained cyber nodal officers in state police, and develop panic-button tools like POCSO e-box for rapid reporting and enforcement of online threats to children.

- Improving Digital Skills and Education: Educate children and parents about responsible online behavior, digital literacy, and self-regulation.

- Example: Kerala's Digital De-Addiction (D-DAD) centres offer free counselling for children struggling with digital addiction.

- Awareness: Under programmes like NIPUN Bharat and Digital India, digital literacy campaigns in schools and communities can empower children, parents, and teachers to recognise and mitigate online risks.

- Parental Involvement and Control: Parents should set up social media accounts jointly with children to ensure strong privacy settings and passwords, and use screen-time management tools like Google Family Link to set limits and monitor digital habits across devices.

- Accountability for Tech Companies: Instead of a ban, tech companies can be held accountable for creating safer, child-friendly spaces. Companies like Meta already set age limits (13+) on their platforms to ensure children's safety online.

Conclusion

While protecting children from the harms of unregulated social media is imperative, the way forward lies in a calibrated approach that combines child-centric digital governance, robust regulatory oversight, parental involvement, and accountability of technology platforms. By prioritising age-appropriate design, digital literacy, and safe online spaces, societies can ensure that children are shielded from risks without being excluded from the positive and empowering potential of the digital world.